Abstract

Perceptual information is important for the meaning of nouns. We present modality exclusivity norms for 485 Dutch nouns rated on visual, auditory, haptic, gustatory, and olfactory associations. We found these nouns are highly multimodal. They were rated most dominant in vision, and least in olfaction. A factor analysis identified two main dimensions: one loaded strongly on olfaction and gustation (reflecting joint involvement in flavor), and a second loaded strongly on vision and touch (reflecting joint involvement in manipulable objects). In a second study, we validated the ratings with similarity judgments. As expected, words from the same dominant modality were rated more similar than words from different dominant modalities; more importantly, this effect was enhanced when word pairs had high modality strength ratings. In a third study, we further demonstrated the utility of our ratings by investigating whether perceptual modalities are differentially experienced in space. Nouns were categorized into their dominant modality and used in a lexical decision experiment where words were presented in either proximal or distal space. We found words dominant in olfaction were processed faster in proximal than distal space compared to the other modalities, suggesting olfactory information is mentally simulated as “close” to the body. Finally, we collected ratings of emotion (valence, dominance, and arousal) to assess its role in perceptual space simulation, but valence did not explain the data. So, words are processed differently depending on their perceptual associations, and strength of association is captured by modality exclusivity ratings.

The meaning of words is grounded in perception and action, as illustrated in recent years by the substantial literature within the embodiment research framework (for a review see Meteyard, Cuadrado, Bahrami, & Vigliocco, 2012). When we read words with strong sensory meanings we recruit the perceptual system to aid in comprehension, reflecting so-called “mental simulation” (e.g., Barsalou, 1999; Glenberg & Kaschak, 2002; Stanfield & Zwaan, 2001). Semantic knowledge of perceptual information has been shown to activate perceptual regions of the brain (Goldberg, Perfetti, & Schneider, 2006). This has been found for words with strong visual associations (e.g., Simmons et al., 2007), auditory associations (e.g., Kiefer, Sim, Herrnberger, Grothe, & Hoenig, 2008), olfactory associations (Gonzalez et al., 2006), and gustatory associations (Barros-Loscertales et al., 2012).

If understanding words activates perceptual information gained through experience, then words should activate multiple modalities because our experience of the world is multimodal. For example, the concept “wine” might include the smell of the wine as you place your nose in the glass, and the taste as you take your first sip. But there will also be perceptual information from other modalities, such as the color of the wine, the shape of the glass, and the sound of a bottle of wine opening. Behavioral evidence suggests that words can simultaneously activate perceptual content in more than one modality. For example, words that imply fast or slow motion interact with the perceptual depiction of fast and slow motion in both the visual modality (lines moving quickly or slowly) and the auditory modality (the sound of fast and slow footsteps) (Speed & Vigliocco, 2015). This is evidence that mental simulation during word processing is multimodal.

The multimodal composition of word meanings has also been supported by modality ratings of concept-property relations, adjectives, nouns, and verbs in English (Lynott & Connell, 2009, 2013; van Dantzig, Cowell, Zeelenberg, & Pecher, 2011; Winter, 2016). Native English speakers asked to judge how strongly they experienced a concept in the visual, auditory, tactile, gustatory, and olfactory modalities assign high ratings of experience to more than one modality. These ratings have since been used to predict sound-symbolism in words: perceptual strength was found to be associated with several lexical features including word length, distinctiveness, and frequency (Lynott & Connell, 2013). For example, word length was found to increase with greater auditory strength but decrease with greater haptic strength (Lynott & Connell, 2013). In addition, such modality norms have been used to demonstrate modality-switch costs: responses in a property verification task are slower when attention needs to be switched between perceptual modalities defined by such ratings (Lynott & Connell, 2009; Pecher, Zeelenberg, & Barsalou, 2003). In the present work, we collected a set of modality exclusivity ratings for Dutch nouns, to enable comparable research to be conducted in Dutch-speaking populations. We validated the norms with ratings of similarity and demonstrated their use with a novel experiment investigating the mental simulation of near and far space for words with perceptual meanings.

Word meaning, perceptual simulation, and space

Mental simulations also include spatial information. On reading the word sun for example, we simulate it as having a high position in space, but a word like snake is instead simulated as being low in space. We simulate such spatial locations based on our real-world experiences with objects. There are a number of experiments providing evidence for the perceptual simulation of spatial features (e.g., Dudschig, Souman, Lachmair, de la Vega, & Kaup, 2013; Estes, Verges, & Barsalou, 2008). Estes et al. (2008) demonstrated simulation of height using a target detection task. Participants were slower to detect a target after reading a word referring to an object typically experienced in the same spatial location as the target object. For example, after reading head, responses were slower to targets presented in the upper part of the screen than lower on the screen. The spatial simulation of the word oriented attention to a specific region of space interfering with processing of the target when it appeared there.

As well as high and low space, another spatial distinction in how objects are experienced is in terms of near or far space. Near and far space are highly salient distinctions. The brain contains separate systems responsible for processing near space (approximately within arm’s reach) and far space (approximately beyond arm’s reach). This is supported for example by the existence of proximal/distal neglect (e.g., Cowey, Small, & Ellis, 1994; Halligan & Marshall, 1991), a neuropsychological disorder where patients are found to ignore near or far space. Further, cross-linguistic research shows many languages make a distinction between near and far space in demonstratives (e.g., this and that) (Diessel, 1999; Levinson et al., in press), suggesting the psychological salience of this spatial distinction (see also Kemmerer, 1999). Winter and Bergen (2012) have shown spatial distance is actually simulated during sentence comprehension. They found participants were faster to respond to large pictures and loud sounds after reading sentences describing objects near in space rather than far in space (e.g., You are looking at the milk bottle in the fridge/across the supermarket), and faster to respond to small pictures and quiet sounds after sentences describing objects that are far rather than near. So, language comprehenders represent the distance of objects from linguistic information.

Near and far space are also important for the distinction between the perceptual modalities. We experience objects with our five major senses: visual, auditory, haptic, gustatory, and olfactory. The way we interact with these senses in terms of space differs, however. The senses have traditionally been categorized into proximal versus distal. Cytowic (1995) argued the distinction between “near” perception (including touch and chemosensation, i.e., taste and smell) and “distant” perception (i.e., seeing and hearing) is consistent with classical neurology and neuroanatomy. Similarly, in linguistics and anthropology, haptics, gustation, and olfaction are considered “proximal” senses, whereas vision and audition are considered “distal” senses (Howes, 2003; Majid & Levinson, 2011; San Roque et al., 2015).

In a way, an individual could be considered a “mental location” to which specific mental states or effects are directed (Landau, 2010). Relevant perceptual information can then be represented in relation to this location. We can see or hear things at a distance, i.e., in distal space, removed from the body. However, things we touch or taste can only be perceived when in contact with the body, i.e., in our proximal space. Although olfaction does not require contact with the body, evidence suggests we use the olfactory sense mainly at short distances; olfactory perception is challenged at greater distances (Neo & Heymann, 2015).

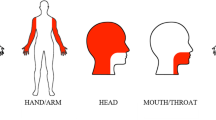

Support for the representation of perceptual modalities in terms of near and far space also comes from sign languages, where signs for smell, taste, and touch occur closer to, or make contact with, the body, whereas signs for see and hear typically use space further from the body (see Fig. 1 for examples from British Sign Language). [Footnote 1]

If specific spatial information is associated with perceptual modalities, do we mentally simulate distance for nouns with strong modality associations? Previous work shows objects can be simulated in near and far space based on their described location (Winter & Bergen, 2012), but can the simulation of near and far space also be affected by the postulated differences in perceptual modalities described above? If so, then objects strongly experienced in the visual modality (e.g., traffic light) should be perceptually simulated in the distance, as should objects strongly experienced in the auditory modality (e.g., thunder). On the other hand, objects strongly experienced by touch (e.g., satin), taste (e.g., steak), and smell (e.g., cinnamon), should be perceptually simulated in near space.

In order to investigate this idea, we tested for spatial simulation using a lexical decision task with words presented as “near to the body” or “far from the body.” We used presentation size to imply distance (as in Winter & Bergen, 2012): words in the near condition had a larger font than words in the far condition. We also presented words as high or low on screen to enhance this distance through perspective. We predicted proximal words (words dominant in haptics, gustation, and olfaction) should be processed faster in near space, whereas distal words (words dominant in vision and audition) should be processed faster in far space.

In what remains, we first describe the procedure for collecting the Dutch modality exclusivity norms and report findings from the modality ratings (following Lynott & Connell, 2013). This is followed by Study 2, where we establish the validity of the norms by conducting a similarity rating experiment. If modality ratings reflect true perceptual similarities, we expect words from the same dominant modality will be rated as more similar than words from different dominant modalities. We then present the procedure and results of Study 3, the lexical decision experiment testing whether perceptual modalities are differentially represented in space. Finally, in order to investigate the possibility that differences between perceptual modalities are driven by emotion associations, we collect emotion ratings (valence, arousal, and dominance) in Study 4.

Study 1: Modality ratings

Method

Participants

Forty-six participants (11 male, average age 23, SD = 4.3) signed up for the ratings task through the Radboud University research participant system and were sent a link to complete a Qualtrics survey. All participants were native speakers of Dutch. Participants were paid €5 in shopping vouchers for their participation.

Materials

Initially, lists of 100 Dutch nouns for each modality (visual, auditory, haptic, gustatory, olfactory) were created. We aimed to find an equal number of words with strong perceptual associations for each modality. To do this, we consulted the stimuli list of Lynott and Connell (2013) as well as that of Mulatti et al. (2014) for words with sound associations, Barros-Loscertales et al. (2012) for words with gustatory associations, and Gonzalez et al. (2006) for words with olfactory associations. Despite our best attempts to curate equal numbers of words for each modality, some words overlapped between the olfactory and gustatory modalities, leading to a list of 485 words in total. Three separate lists were randomly created to be rated by participants using a Qualtrics survey (Qualtrics, Provo, UT, USA).

Procedure

Participants were presented with each word separately and asked to rate to what extent the meaning of the word could be experienced in each perceptual modality, in the following order: by feeling through touch, hearing, seeing, smelling, and tasting, on a scale of 0 (not at all) to 5 (greatly) (following Lynott & Connell, 2009 and Lynott & Connell, 2013). For each modality participants had to click on their chosen value to make their response. Participants were instructed to leave a word unrated if they were unsure of the meaning of the word. See Appendix A for a screenshot of the instructions and procedure.

Results

Three percent of trials in the survey were skipped. Overall, words were rated as primarily experienced in the visual modality, and experienced least in the auditory modality. Mean perceptual strength ratings for each modality are displayed in Table 1. The greatest number of words was found to be dominated by vision and the smallest number by olfaction (see Table 2). So, despite our deliberate choice of words thought to strongly reflect each modality, olfaction appears to be relatively unimportant for the meaning of these words. Five words were equally dominant in more than one modality (garnaal ‘shrimp’, rubber, chai, watjes ‘cotton balls’, injectienaald ‘injection needle’). Table 2 also displays the average modality exclusivity score for words dominant in each perceptual modality. This is the degree to which a concept can be mapped onto one single perceptual modality (see Lynott & Connell, 2009). It is calculated by dividing the range of ratings by the sum, as in the formula below, where M is a vector of mean ratings for each of the five perceptual modalities:

$$\text{modality exclusivity} = \frac{\max(M) - \min(M)}{\sum M} \times 100\%$$

A modality exclusivity value of 0% would reflect a fully multimodal concept, whereas a value of 100% would reflect an entirely unimodal concept. Maan ‘moon’ had the highest modality exclusivity score (100%), being experienced exclusively through vision, and kip ‘chicken’ was the most multimodal, with a modality exclusivity score of 13%.
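As a minimal sketch of the calculation described above (the range of the five mean ratings divided by their sum), the following Python snippet computes modality exclusivity and the dominant modality or modalities for a single word. The ratings are invented for illustration and are not taken from the norms, and the function names are hypothetical.

```python
MODALITIES = ("visual", "auditory", "haptic", "gustatory", "olfactory")

def modality_exclusivity(mean_ratings):
    """Range of the mean ratings divided by their sum, as a percentage."""
    values = [mean_ratings[m] for m in MODALITIES]
    total = sum(values)
    if total == 0:
        raise ValueError("exclusivity is undefined when all ratings are 0")
    return 100.0 * (max(values) - min(values)) / total

def dominant_modalities(mean_ratings):
    """Modalities sharing the highest mean rating (more than one if tied)."""
    top = max(mean_ratings[m] for m in MODALITIES)
    return [m for m in MODALITIES if mean_ratings[m] == top]

# Hypothetical mean ratings on the 0-5 scale (illustration only).
unimodal = {"visual": 4.5, "auditory": 0.0, "haptic": 0.0,
            "gustatory": 0.0, "olfactory": 0.0}
multimodal = {"visual": 4.0, "auditory": 3.0, "haptic": 4.0,
              "gustatory": 3.0, "olfactory": 3.0}

print(modality_exclusivity(unimodal))              # 100.0
print(round(modality_exclusivity(multimodal), 1))  # 5.9
print(dominant_modalities(multimodal))             # ['visual', 'haptic']
```

A word rated in only one modality reaches 100%, whereas a word with similar ratings across all five modalities approaches 0%. Ties for the highest rating yield more than one dominant modality, as for the five tied items mentioned above.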

Overall, words had an average modality exclusivity score of 47%. We note that this is close to the average modality exclusivity score Lynott and Connell (2009) observed with a set of adjectives (46.1%). In Lynott and Connell (2013), however, the authors argue that nouns are more multimodal than adjectives, based on an average modality exclusivity of 39.2% for their set of nouns. In the present study and in Lynott and Connell (2009) words were selected with the intention of sufficiently covering the five perceptual modalities, whereas in Lynott and Connell (2013) words were randomly selected. This raises the question of whether differences in item sampling lead to differences in modality exclusivity. Although vision has a higher modality exclusivity score compared to the other perceptual modalities (52%), its modest value suggests the meanings of the words tend to be fairly multimodal in general.

Correlations were conducted between each modality (Table 3). As expected, olfactory and gustatory ratings were strongly correlated with each other, which reflects their association to each other in flavor. Auditory, haptic, and visual ratings were negatively correlated with gustatory and olfactory ratings, and haptic and visual ratings were positively correlated with each other.

To examine the structure of the modality space we used factor analysis with principal component extraction and Varimax rotation in SPSS. Two components were extracted based on the scree plot and eigenvalues, together accounting for 68.33% of the variance in the data (Fig. 2). The first component, accounting for 44.45% of the variance, had strong positive loadings from gustatory and olfactory ratings, and strong negative loadings from auditory ratings. This likely reflects the fact that the items selected for their strong gustatory and olfactory properties were primarily food and drink products, for which auditory information is not a salient conceptual feature. The second component, accounting for 23.88% of the variance, had strong positive loadings from haptic and visual ratings but weak and negative loadings from the ratings in the other three modalities. This is likely to reflect the ecological coupling of vision and touch: many objects that can be touched can also be seen (Louwerse & Connell, 2011, 2013).

Discussion

In line with the modality ratings for adjectives and nouns collected by Lynott and Connell (2009, 2013), the present ratings reflect the multimodality of concepts. In addition, we replicated a visual dominance effect (Lynott & Connell, 2009, 2013; van Dantzig et al., 2011; Winter, 2016) with Dutch speakers. However, in comparison with Lynott and Connell (2013) who found gustation was the least dominant sense for English nouns, we found the least dominant sense for this set of Dutch words was olfaction. Furthermore, Lynott and Connell (2013) found words dominant in audition had the highest modality exclusivity scores and words dominant in olfaction had the lowest, but here words dominant in the visual modality had the highest modality exclusivity scores and words dominant in the gustatory modality had the lowest modality exclusivity scores instead. However, as mentioned previously, the present set of nouns was specifically selected to cover the five perceptual modalities, so cannot be taken to reflect Dutch nouns in general. In comparison, Lynott and Connell’s (2013) nouns were randomly selected.

Using factor analysis, we found olfaction and gustation nouns positively loaded onto one factor, possibly reflecting their joint contribution to flavor, while vision and touch positively loaded onto an orthogonal factor, which reflects perhaps their joint involvement in the experience of manipulable objects. Since the words used in the present study were different to those used by Lynott and Connell (2013), the differences may reflect characteristics of the specific words chosen. Nevertheless, both our study and that by Lynott and Connell (2013) find olfaction and gustation are highly correlated, and so “flavor” is possibly the weakest modality in terms of dominance and exclusivity.

Study 2: Similarity ratings task

In order to assess the utility of the collected modality ratings we conducted a similarity rating task for word pairs. By asking participants to compare the similarity of word referents, we can assess whether our modality ratings accurately reflect salient perceptual information. We predicted word pairs from the same dominant modality would be rated as more similar than words from different dominant modalities. Moreover, if ratings are capturing behaviorally relevant meaning components, then word pairs from the same dominant modality with “high strength” should also have higher similarity ratings, whereas word pairs from different dominant modalities with “high strength” should have lower similarity ratings. This follows if modality strength reflects salient conceptual components about word meaning (Table 4).

Method

Participants

Twenty-one native Dutch speakers (15 female, M age = 22.29 years, SD = 4.16) were recruited from the Radboud University Sona system and sent a link to a Qualtrics survey. Participants were paid €5 in shopping vouchers for their participation.

Stimuli

Twenty-four nouns dominant in each modality were selected for the similarity rating task. We chose 24 because this was the lowest even number of items dominant in a single modality (olfaction). For each modality, nouns were categorized as either “high strength” (n = 12) or “low strength” (n = 12) based on their modality ratings, and then further subdivided so that half of the nouns at each modality and strength level were set as targets, and half as comparisons. Each target noun was paired with a comparison noun in the following conditions: (1) same modality and matching strength, (2) same modality and mismatching strength, (3) different modality and matching strength, or (4) different modality and mismatching strength. Each target noun thus appeared four times, and each comparison noun appeared once in each of the four conditions. In total 240 noun-pairs were created to be rated.
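The stimulus counts implied by this design can be checked with a few lines of arithmetic. The sketch below assumes, as described above, five modalities with 24 nouns each, half of which serve as targets, and four pairing conditions per target. The variable names are illustrative only.

```python
N_MODALITIES = 5
NOUNS_PER_MODALITY = 24  # 12 "high strength" + 12 "low strength"
CONDITIONS = [
    ("same modality", "matching strength"),
    ("same modality", "mismatching strength"),
    ("different modality", "matching strength"),
    ("different modality", "mismatching strength"),
]

total_nouns = N_MODALITIES * NOUNS_PER_MODALITY  # 120 nouns overall
targets = total_nouns // 2                       # 60 target nouns
comparisons = total_nouns - targets              # 60 comparison nouns
noun_pairs = targets * len(CONDITIONS)           # each target appears 4 times

# If comparison nouns are used equally often, each one appears this many times.
uses_per_comparison = noun_pairs // comparisons

print(total_nouns, targets, noun_pairs, uses_per_comparison)  # 120 60 240 4
```

The result matches the 240 noun-pairs reported, with each comparison noun used four times (once per condition).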

Procedure

The rating task was conducted using Qualtrics. Participants were presented with the word pairs and asked to rate how similar the referents of the two words were, using a scale of 0 to 7. The instructions were given in Dutch: “In hoeverre verwijzen deze woorden naar soortgelijke dingen of concepten?” (“To what extent do these words refer to similar things or concepts?”). Participants used their mouse to click on the chosen rating for each word pair.

Results

The “matching strength” category was divided into “high” and “low” strength, to specifically test the prediction that similarity should be higher in the same modality condition when modality ratings are of high strength than low strength. We therefore conducted a 2 (modality: same vs. different) by 3 (strength: high-same vs. low-same vs. different) within-participants ANOVA. There was a main effect of modality, F(1, 20) = 132.2, p < .001, ηp² = .87; a main effect of strength, F(2, 40) = 23.06, p < .001, ηp² = .54; and, more critically, a significant interaction between them, F(2, 40) = 38.35, p < .001, ηp² = .66. Tests of simple main effects showed similarity ratings for same modality word pairs were higher when the strength of both words was high than when they were both low, p < .001, d = 0.84, or when they mismatched in strength, p < .001, d = 0.72. For different modality word pairs, there was no difference in similarity ratings between pairs with high modality strength and pairs with low modality strength, p = 1, d = 0.10, or mismatching strength, p = .35, d = 0.12. Mean similarity ratings are shown in Fig. 3.
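The reported effect sizes can be recovered from the F statistics and their degrees of freedom using the standard identity for partial eta squared: (F × df_effect) / (F × df_effect + df_error). The Python sketch below applies this identity to the three effects reported above. The function name is illustrative, and the calculation is a consistency check rather than a re-analysis of the data.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# (F, df_effect, df_error) as reported for the 2 x 3 within-participants ANOVA.
effects = {
    "modality": (132.2, 1, 20),
    "strength": (23.06, 2, 40),
    "modality x strength": (38.35, 2, 40),
}

for name, (f_value, df1, df2) in effects.items():
    print(f"{name}: {partial_eta_squared(f_value, df1, df2):.2f}")
# modality: 0.87
# strength: 0.54
# modality x strength: 0.66
```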

Discussion

Participants judged referents of words to be more similar if they came from the same dominant modality, compared to a different dominant modality. Furthermore, word pairs in the same dominant modality with “high strength” had higher similarity ratings. The similarity ratings therefore support the modality ratings, demonstrating they capture salient perceptual information related to word meaning.

Study 3: Perceptual distance experiment

We used the modality ratings to investigate further the meaning of nouns by examining whether different perceptual modalities have different representations of space. Are the “distal senses” of sight and hearing really represented further away in space than the “proximal senses” of touch, taste, and smell?

Method

Participants

Forty-two participants were recruited from the Radboud University research participant system (nine male, average age 22.88 years, SD = 4.03). Participants were all native speakers of Dutch. Participants were paid €5 in shopping vouchers for their participation.

Materials

All 485 words from the modality ratings were used in the experiment. Words were randomly assigned to one of two experimental lists. An equal number of non-words was generated using the Dutch non-word generator “Wuggy” (Keuleers & Brysbaert, 2010), which ensured non-words were pronounceable and orthographically legal in Dutch.

Procedure

Words were presented at a low (near) or high (far) position onscreen, with a triangular background image creating the illusion of distance (see Fig. 4). Words presented in the low position also had a larger font size (Arial 24) than words presented at the high position (Arial 10), to further create a sense of distance. Each word was presented twice for each participant, once in each spatial position. Participants were instructed to respond with a button box as to whether what was presented was a real Dutch word or not. The experimental procedure lasted around 30 min.

Results

Response time data were analyzed for correct lexical decisions (word or non-word) after removing items with more than one dominant modality (n = 5), and items with overall accuracy less than 70% (n = 33), leaving a total of 447 words. Individual trials were removed if they were outside 2.5 SD of a participant’s mean response time (2.5% of remaining data).
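A minimal sketch of the trial-level trimming step, assuming response times in milliseconds for a single participant and the sample standard deviation. The data and function name are hypothetical, and the original analysis scripts may differ in detail.

```python
from statistics import mean, stdev

def trim_outliers(rts, n_sd=2.5):
    """Keep response times within n_sd standard deviations of the participant's mean."""
    m, sd = mean(rts), stdev(rts)
    return [rt for rt in rts if abs(rt - m) <= n_sd * sd]

# Hypothetical correct-trial response times (ms) for one participant.
rts = [600, 610, 590, 605, 595, 615, 585, 600, 610, 590, 2000]
kept = trim_outliers(rts)

print(len(rts) - len(kept))  # number of trials removed: 1
```

Because the mean and standard deviation are computed per participant, the criterion adapts to each participant's overall speed and variability.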

Modality categories

Data were first analyzed using the spatial distinction of “proximal” and “distal.” Words rated as dominant in the visual and auditory modality were categorized as “distal” modalities and words rated as dominant in the haptic, gustatory, and olfactory modality were categorized as “proximal” modalities, based on how these modalities operate in space in everyday experience (see Introduction).

Response times were analyzed with linear mixed effects models in R (R Core Team, 2013), using the lme4 package (Bates, Maechler, Bolker, & Walker, 2015) with the fixed factors “word category” (proximal vs. distal) and “presentation distance” (near vs. far) and the interaction between the two. Covariates in the model were Dutch word frequency (Celex, spoken Dutch, and Subtlex) and word length. Items were modelled as random intercepts and participants as random intercepts and slopes. [Footnote 2] To assess statistical significance of factors we used likelihood-ratio tests, comparing models with and without the factors of interest (see Winter, 2013). Specification of model comparisons can be found in Appendix B. As predicted, there was a (marginally) significant interaction between word category and presentation distance, χ²(1) = 3.77, p = .052. Distal words were responded to more slowly when presented near than far (see Fig. 5).

In order to examine perceptual modality in more detail, the data were analyzed coding each dominant modality separately. The model was the same as before, including all covariates, but this time “word category” had five levels (visual, auditory, haptic, gustatory, olfactory). [Footnote 3] Again there was a significant interaction between word category and distance, χ²(4) = 9.74, p = .045. For words rated strongest in the olfactory modality, responses were faster when words were presented near than far. For all other modalities responses were faster when words were presented far rather than near (see Fig. 6). So, out of the “proximal” category, only words dominant in olfaction showed the predicted pattern of facilitated responses in near presentation.
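The p values for the two likelihood-ratio tests reported above follow from the chi-square distribution. The test statistic is twice the difference in log-likelihood between the models with and without the term of interest, and the degrees of freedom equal the number of parameters dropped. As a rough check, the Python sketch below recomputes the p values from the reported statistics using closed-form upper-tail expressions for integer degrees of freedom. The function name is illustrative and no model fitting is reproduced.

```python
import math

def chi2_upper_tail(x, df):
    """Upper-tail probability of a chi-square variable for integer df >= 1."""
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum over k < df/2 of (x/2)^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= half / k
            total += term
        return math.exp(-half) * total
    # Odd df: start from the df = 1 case and add one term per two extra df.
    p = math.erfc(math.sqrt(half))
    term = math.sqrt(2.0 * x / math.pi) * math.exp(-half)
    for k in range(3, df + 1, 2):
        p += term
        term *= x / k
    return p

print(round(chi2_upper_tail(3.77, 1), 3))  # proximal/distal x distance: 0.052
print(round(chi2_upper_tail(9.74, 4), 3))  # five modalities x distance: 0.045
```

Both values agree with the reported p = .052 and p = .045.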

Modality ratings

The modality norms also include continuous measures of modality strength, as well as modality categories. Assessing the response time data in terms of broad categories may be too coarse, losing some of the perceptual detail captured by the continuous ratings. We therefore fitted a separate linear mixed effects model of response time for each modality, with the perceptual strength rating in that modality and its interaction with distance (near vs. far) as fixed factors, and word length and word frequency as covariates. Each model included all of the words used in the experiment, regardless of dominant modality. As before, likelihood-ratio tests were conducted between models with and without the factor of interest, and the model specification can be found in Appendix B. Ratings of strength for vision, taste, and smell were significant predictors in their respective models: vision χ²(3) = 52.04, p < .001; taste χ²(3) = 22.59, p < .001; smell χ²(3) = 15.79, p < .01. That is, words were responded to more quickly if they were rated high in terms of vision, taste, or smell. There were no other significant effects.

In addition, for each word a total modality score (following Lynott & Connell, 2013) was calculated by summing the ratings for all modalities. A high total score, therefore, reflects that a referent is experienced strongly in perception overall. A linear mixed effects model with total modality score as a fixed factor and word length and word frequency as covariates was conducted on the response time data. Total modality score significantly predicted response time, χ²(3) = 74.47, p < .001, with response times faster for words with higher total modality scores. So, a greater number of perceptual associations appears to facilitate lexical access.

Discussion

We found words dominant in olfaction were responded to faster in near presentation than far presentation, whereas the opposite was true for all other modalities. Although this was predicted for odor, we also expected words dominant in taste and touch would follow the same pattern. One possible explanation for the difference between odor words and words dominant in the other proximal senses is that odor words are more emotionally valenced. Odor is thought to be strongly tied to emotion due to the close proximity between the olfactory system and the emotion processing system in the brain (see Larsson, Willander, Karlsson, & Arshamian, 2014; Soudry, Lemogne, Malinvaud, Consoli, & Bonfils, 2011, for reviews), and odors are thought to be primarily perceived in terms of their valence (e.g., Yeshurun & Sobel, 2010). It is possible, therefore, that words with strong olfactory information are also loaded with more emotional information (although, note, this has also been suggested for taste; see Krifka, 2010; Winter, 2016). Emotion, being somewhat personal and associated with the body, may be processed more efficiently when close to the body. To investigate this idea, we collected emotion ratings for our set of nouns.

Study 4: Emotion ratings

Above we suggested that one explanation for why odor words were facilitated in near presentation, but touch and taste words were not, is that odor words may be more emotionally valenced. In order to test this possibility, we collected ratings of emotion in terms of valence/pleasantness, activity/arousal, and power/dominance following Moors et al. (2013). The three variables are thought to reflect different components of emotion and mood: valence judgments reflect whether the word refers to something positive or negative; arousal judgments reflect whether the word refers to something arousing or calming; and dominance judgments reflect whether a word refers to something weak or dominant.

Method

Participants

Twelve native Dutch speakers (ten female, M age = 23.17 years, SD = 6.75) were recruited from the Radboud University Sona system and sent a link to a Qualtrics survey. Participants were paid €5 in shopping vouchers for their participation.

Stimuli and procedure

All 485 items from the original norming study were used. The task was conducted using Qualtrics. Participants were presented with each word and asked to rate it on three dimensions with a 7-point Likert scale, following Moors et al. (2013): (1) To what extent does the word refer to something that is positive/pleasant or negative/unpleasant? (1 = very negative/unpleasant, 7 = very positive/pleasant), (2) To what extent does the word refer to something that is active/arousing or passive/calm? (1 = very passive/calm, 7 = very active/aroused), (3) To what extent does the word refer to something that is weak/submissive or strong/dominant? (1 = very weak/submissive, 7 = very strong/dominant). Participants used their mouse to click on the chosen rating for each word. The full instructions in Dutch can be found in Appendix C.

Results

An average rating for each word on each dimension was calculated, and a between items ANOVA with the factor dominant modality was conducted. Items that belonged to more than one dominant modality were not included in the analysis.

There was a main effect of modality on valence, F(4, 475) = 4.66, p < .001, ηp² = .04. As seen in Fig. 7a, however, olfactory words were not more valenced than words from other modalities. Instead, words dominant in haptics were rated as most valenced. Since the valence scale ran from very negative/unpleasant to very positive/pleasant, we transformed the ratings to reflect absolute valence, so that 0 would reflect neutral valence and 3.5 would reflect high valence (positive or negative). This time there was no effect of modality, F(4, 475) = 1.51, p = .2, ηp² = .01, but again haptic words were most valenced (Fig. 7b).
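The absolute-valence transformation folds the bipolar scale around its neutral point, so that strongly negative and strongly positive words receive the same score. The sketch below is an illustration under an assumption: the constant used in the original transformation is not given here, so the neutral point is a parameter, defaulting to 4 because Appendix C labels that scale point as neutral. The function name is hypothetical.

```python
def absolute_valence(mean_rating, neutral_point=4.0):
    """Distance of a mean valence rating from the neutral point of the scale.

    `neutral_point` is an assumption (4 is the scale point labelled neutral
    in the rating instructions); change it if a different constant was used.
    """
    return abs(mean_rating - neutral_point)


# A very unpleasant and a very pleasant word end up equally "valenced".
print(absolute_valence(1.5))  # 2.5
print(absolute_valence(6.5))  # 2.5
print(absolute_valence(4.0))  # 0.0
```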

There was a main effect of modality on arousal, F(4, 475) = 18.7, p < .001, ηp² = .14. However, again olfactory words did not receive higher ratings of arousal than words in other dominant modalities. Instead, words from the auditory category had the highest arousal values (Fig. 7c).

Ratings of dominance also differed significantly across modalities, F(4, 475) = 13.65, p < .001, ηp² = .10. This time, although ratings were highest overall in the auditory modality, ratings for olfactory words were second highest. Power/dominance therefore appears to be the dimension on which olfactory words differ from words in the other “proximal” senses.
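For a one-way design, partial eta squared can be recovered from the F statistic and its degrees of freedom as F × df_effect / (F × df_effect + df_error). The short sketch below applies this standard identity to the four tests reported above as a consistency check; the function name is hypothetical and the code is not taken from the original analysis.

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Partial eta squared computed from an F statistic and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)


# F values as reported in the text, all with (4, 475) degrees of freedom.
reported_f = {
    "valence": 4.66,
    "absolute valence": 1.51,
    "arousal": 18.7,
    "dominance": 13.65,
}
for dimension, f_stat in reported_f.items():
    print(dimension, round(partial_eta_squared(f_stat, 4, 475), 2))
# valence 0.04
# absolute valence 0.01
# arousal 0.14
# dominance 0.1
```

The recomputed values agree with the effect sizes reported for each dimension.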

General discussion

In this paper, we provide modality exclusivity and emotion norms for 485 Dutch nouns. This work provides a Dutch equivalent to Lynott and Connell’s (2013) modality exclusivity norms for English nouns (although note that different words were used). Our modality ratings support the claim that concepts are in general experienced multimodally.

We found words were most dominant in the visual modality and least dominant in olfaction, and thus Dutch nouns differentially encode perceptual information (Levinson & Majid, 2014). The fact that olfaction is the least dominant could reflect the reported difficulty people have in conjuring up mental imagery of odors (e.g., Sheehan, 1967). Words rated as visually dominant had the greatest modality exclusivity scores, meaning these concepts are less multimodal than words dominant in other modalities. Words rated as dominant in gustation had the lowest modality exclusivity scores, i.e., they were the most multimodal. It is interesting to note in this regard that words dominant in gustation are most likely to be words for food (e.g., mustard, peanut butter) and their referents are typically experienced in a multimodal environment (Auvray & Spence, 2008).
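To make the link between exclusivity and multimodality concrete, the sketch below computes a modality exclusivity score as the range of a word's five ratings divided by their sum, which is the definition used by Lynott and Connell (2009). Treating that definition as the one applied here is an assumption, since the formula is not restated in this section. The function names and the ratings are hypothetical and are not taken from the norms.

```python
MODALITIES = ("visual", "auditory", "haptic", "gustatory", "olfactory")


def modality_exclusivity(ratings):
    """Range of the five modality ratings divided by their sum.

    0 means the word is rated equally in every modality (fully multimodal);
    1 means it is rated in a single modality only (fully unimodal).
    """
    values = [ratings[m] for m in MODALITIES]
    total = sum(values)
    if total == 0:
        raise ValueError("exclusivity is undefined when all ratings are zero")
    return (max(values) - min(values)) / total


def dominant_modalities(ratings):
    """Modalities with the highest rating; more than one entry signals a tie."""
    top = max(ratings[m] for m in MODALITIES)
    return [m for m in MODALITIES if ratings[m] == top]


# Hypothetical ratings: a food-like word versus a purely visual word.
food_like = {"visual": 3.5, "auditory": 0.5, "haptic": 2.0, "gustatory": 4.5, "olfactory": 3.5}
visual_only = {"visual": 5.0, "auditory": 0.0, "haptic": 0.0, "gustatory": 0.0, "olfactory": 0.0}

print(dominant_modalities(food_like), round(modality_exclusivity(food_like), 2))
print(dominant_modalities(visual_only), modality_exclusivity(visual_only))
```

The food-like word is dominant in gustation but has a low exclusivity score because several other modalities are also rated highly, which is the pattern described above for gustatory words.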

A factor analysis of the modality ratings data identified two main factors. The first had strong positive loadings from olfaction and gustation, which likely reflects their joint involvement in flavor, while the second factor had strong positive loadings from vision and touch, reflecting perhaps their joint involvement when experiencing manipulable objects.

In order to validate these modality norms, we conducted a similarity rating experiment, where participants rated the similarity of the referents of word pairs. Word pairs from the same dominant modality were rated as more similar than word pairs from different modalities. Additionally, words from the same dominant modality were rated as even more similar if both words had high ratings within that modality. This suggests modality ratings do reflect salient perceptual associations of word meaning.

Going a step further, we demonstrated how the modality norms can be used in the development of experimental stimuli. Using modality categorization based on our modality rating norms, we conducted a lexical decision task, presenting words in either a near or far location on a screen. In comparison to all other modalities, we found words with olfactory dominance were processed faster in the near location than in the far location. Additionally, overall response times were faster the higher a word’s rating in the visual, gustatory, or olfactory modality, and the higher the total modality scores.

Although faster processing of olfactory dominant words in the near position than the far position was predicted according to the proximal-distal dichotomy, differences between olfactory dominant and both haptic and gustatory dominant words were not predicted. All three modalities are typically experienced in relatively close proximity to the body; in fact, taste and touch cannot be experienced from a distance. Based on this real-world experience, they should be simulated as close to the body. Yet we only found facilitation in the near presentation for words dominant in olfaction.

There are certain qualities of olfaction that diverge from those of the other modalities that could explain the pattern of responses for olfactory dominant words. In Western societies, smell is typically undervalued; individuals find it very difficult to name odors, and mental imagery of odors is difficult (e.g., Cain, 1979; Crowder & Schab, 1995; de Wijk, Schab, & Cain, 1995; Majid & Burenhult, 2014). This suggests semantic information for olfaction is weak. One could therefore hypothesize that smell would subsequently be harder to simulate in the distance than nearby since things in the distance are harder to perceive. Alternatively, smelling an odor involves sniffing, or inhaling, which is an active intake of odorous molecules into the body. From this point of view then, odors could be conceptualized as closer to the body, becoming internalized as they are smelled. Taste, on the other hand, is distinct from swallowing (although, of course, taste does involve putting something inside the mouth).

Odor is thought to be strongly associated with emotion (e.g., Larsson, Willander, Karlsson, & Arshamian, 2014; Soudry, Lemogne, Malinvaud, Consoli, & Bonfils, 2011; Yeshurun & Sobel, 2010), suggesting words dominant in olfaction may be loaded with more emotional information. So, one could predict differences in responses may be due to emotion. For example, more emotional words may be facilitated closer to the body, because emotions are personal and associated with the body. In order to investigate the role of emotion in performance in the experiment, we collected a set of emotion norms for all nouns, including ratings of valence, arousal, and dominance (Study 4). These data also provide important norms for the future study of emotion in language (Majid, 2012). Contrary to expectations, the valence ratings we collected did not show olfactory words were more valenced, so this cannot explain the differences we found in Study 3. Olfactory words did, however, receive higher ratings of “dominance” than haptic and gustatory words. Dominance involves “appraisal of control, leading to feelings of power or weakness, interpersonal dominance or submission, including impulses to act or refrain from action” (Fontaine, Scherer, Roesch, & Ellsworth, 2007). One could provide a post-hoc explanation as to why word referents involving more control or power could be processed faster in near space than in far space, yet the opposite explanation could perhaps also be satisfactory. Moreover, since words dominant in audition were rated highest in dominance but did not pattern with olfactory words in Study 3, we do not believe this is a relevant variable.

Another finding was that words with stronger visual associations had faster responses. This is in line with Connell and Lynott’s (2014) finding from a lexical decision task showing visually-dominant words were facilitated relative to words with strong auditory associations. Connell and Lynott suggested responses to words with visual associations are faster because the lexical decision task engages visual attention. To our knowledge, our study is the first to demonstrate facilitation of word processing for strong gustatory and olfactory associations. One can speculate why this facilitation would be observed. Words with strong gustatory and olfactory ratings are most likely to be words denoting food and drink, and are therefore things humans desire, leading to approach behavior. In line with this, research has shown more tempting food words (e.g., chips rather than rice) lead to simulation of eating behavior (Papies, 2013), and simple food cues can lead to eating simulation that potentially motivates consumption (Chen, Papies, & Barsalou, 2016). This proposal awaits further confirmation in the future.

However, another explanation for the facilitation of olfactory responses could lie in emotional valence. As described above, olfaction is strongly linked with emotion. Research has shown valenced words are processed faster (Kousta, Vinson, & Vigliocco, 2009). Similarly, taste pleasantness also leads to faster lexical decision times (Amsel, Urbach, & Kutas, 2012). However, as can be observed in the valence ratings (Study 4), words dominant in haptics were, in fact, more positively valenced than gustatory and olfactory words, so this cannot fully explain the overall facilitation of gustatory and olfactory words.

The finding that a higher total modality score leads to faster responses supports studies finding greater semantic richness (i.e., more semantic features) leads to enhanced conceptual activation which facilitates lexical access, as measured by lexical decision performance and naming (Pexman, Lupker, & Hino, 2002; Rabovsky, Schad, & Abdel Rahman, 2016), and facilitates implicit word learning (Rabovsky, Sommer, & Abdel Rahman, 2012). In the present study, one could interpret a higher total modality score as reflecting a greater number of perceptual features (see Pexman, Holyk, & Monfils, 2003 for discussion of how a greater number of perceptual symbols could facilitate processing). On the other hand, a greater number of perceptual associations is not necessarily an indicator of more semantic features in general, as they reflect only one component of semantic richness. For example, concepts can also differ in the number of abstract features they have. It could be concluded, then, that greater modality associations facilitate processing, at least for concrete nouns.

In terms of the theoretical contribution of our experiment, one could argue that lexical decision is not the most suitable task to observe mental simulation effects, as it does not strongly require semantic access. Thus, stronger effects of modality could be observed in a more explicit semantic task, using semantic judgments (e.g., “is this an object?”). Another interesting speculation is that information in specific modalities may become more or less relevant depending on the context (e.g., van Dam, van Dijk, Bekkering, & Rueschemeyer, 2012). For example, we might expect stronger effects of presentation distance on odor words if they were to be judged on food-related qualities, or if participants had specific olfactory-related experiences, such as that of chefs or perfumers. Averaging across experience, certain modalities may be experienced more often in near or far space, but there may still be differences within a modality category. For example, smoke can be smelled from a distance but cumin cannot, yet they are both from the olfactory category. It is therefore likely that mental simulation of distance for perceptual modality is a less robust relationship than, for example, simulation of spatial height based on real-world location (e.g., Dudschig, Souman, Lachmair, de la Vega, & Kaup, 2013; Estes, Verges, & Barsalou, 2008).

Conclusion

When we read words, information from multiple perceptual modalities is activated, and this information is important for the simulation of concepts. We use our senses differently in space, and this experience affects the spatial nature of mental simulations. Our results provide the first multimodal ratings for Dutch nouns and highlight the importance of multimodal information for word meanings. Words appear to be processed differently depending on the perceptual information associated with the concept. In particular, words with strong associations with visual, gustatory, or olfactory information were processed faster than words dominant in the other perceptual modalities, even after controlling for frequency. Moreover, processing of words with greater perceptual associations overall was facilitated.

The spatial location of word presentation was also important for words dominant in olfactory associations, with processing faster in proximal space than distal space. This suggests words with olfactory associations are mentally simulated near to the body, reflecting the way in which odor is experienced in the world. In sum, our results illustrate that ratings of modality exclusivity capture important experiential information related to concepts that affect the way words are processed. Differences across the senses lead to differences in mental simulation of word meanings.

Notes

Note that there is another option for HEAR in BSL which occurs closer to the head.

Participant slopes for word category were included, but the models including random slopes by participant for distance did not converge. In addition, models including item random slopes did not converge.

For this analysis the models with random slopes did not converge so only random intercepts were included.

References

Amsel, B. D., Urbach, T. P., & Kutas, M. (2012). Perceptual and motor attribute ratings for 559 object concepts. Behavior Research Methods, 44(4), 1028–1041. doi:10.3758/s13428-012-0215-z

Auvray, M., & Spence, C. (2008). The multisensory perception of flavor. Consciousness and Cognition, 17(3), 1016–1031. doi:10.1016/j.concog.2007.06.005

Barros-Loscertales, A., Gonzalez, J., Pulvermüller, F., Ventura-Campos, N., Bustamante, J., Costumero, C. V., Parcet, M. A., & Avila, C. (2012). Reading salt activates gustatory brain regions: fMRI evidence for semantic grounding in a novel sensory modality. Cerebral Cortex, 22(11), 2554–63. doi:10.1093/cercor/bhr324

Barsalou, L. W. (1999). Perceptual symbol systems. Behavioral and Brain Sciences, 22(4), 577–660. doi:10.1017/S0140525X99532147

Bates, D., Maechler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. doi:10.18637/jss.v067.i01

Cain, W. S. (1979). To know with the nose: Keys to odor identification. Science, 203, 467–470. doi:10.1126/science.760202

Chen, J., Papies, E. K., & Barsalou, L. W. (2016). A core eating network and its modulations underlie diverse eating phenomena. Brain and Cognition, 110, 20–42.

Connell, L., & Lynott, D. (2014). I see/hear what you mean: Semantic activation in visual word recognition depends on perceptual attention. Journal of Experimental Psychology: General, 143(2), 527–533. doi:10.1037/a0034626

Cowey, A., Small, M., & Ellis, S. (1994). Left visuo-spatial neglect can be worse in far than in near space. Neuropsychologia, 32(9), 1059–1066. doi:10.1016/0028-3932(94)90152-X

Crowder, R. G., & Schab, F. R. (1995). Imagery for odors. In F. R. Schab & R. G. Crowder (Eds.), Memory for odors (pp. 93–107). Mahwah: Erlbaum.

Cytowic, R. E. (1995). Synesthesia: Phenomenology and neuropsychology. Psyche, 2(10), 2–10.

de Wijk, R. A., Schab, F. R., & Cain, W. S. (1995). Odor identification. In F. R. Schab & R. G. Crowder (Eds.), Memory for odors (pp. 21–37). Mahwah: Erlbaum.

Diessel, H. (1999). Demonstratives. Form, function and grammaticalization. Amsterdam: John Benjamins Publishing Company.

Dudschig, C., Souman, J., Lachmair, M., de la Vega, I., & Kaup, B. (2013). Reading “sun” and looking up: The influence of language on saccadic eye movements in the vertical dimension. PLoS ONE, 8(2), e56872. doi:10.1371/journal.pone.0056872

Estes, Z., Verges, M., & Barsalou, L. W. (2008). Head up, foot down: Object words orient attention to the objects’ typical location. Psychological Science, 19(2), 93–97. doi:10.1111/j.1467-9280.2008.02051.x

Fontaine, J. R., Scherer, K. R., Roesch, E. B., & Ellsworth, P. C. (2007). The world of emotions is not two-dimensional. Psychological Science, 18(12), 1050–1057. doi:10.1111/j.1467-9280.2007.02024.x

Glenberg, A. M., & Kaschak, M. P. (2002). Grounding language in action. Psychonomic Bulletin & Review, 9(3), 558–65. doi:10.3758/BF03196313

Gonzalez, J., Barros-Loscertales, A., Pulvermüller, F., Meseguer, V., Belloch, V., & Avila, C. (2006). Reading cinnamon activates olfactory brain regions. NeuroImage, 32(2), 906–912. doi:10.1016/j.neuroimage.2006.03.037

Halligan, P. W., & Marshall, J. C. (1991). Left neglect for near but not far space in man. Nature, 350, 498–500. doi:10.1038/350498a0

Howes, D. (2003). Sensual relations: Engaging the senses in culture and social theory. Ann Arbor: University of Michigan Press.

Kemmerer, D. (1999). “Near” and “far” in language and perception. Cognition, 73(1), 35–63. doi:10.1016/S0010-0277(99)00040-2

Keuleers, E., & Brysbaert, M. (2010). Wuggy: A multilingual pseudoword generator. Behavior Research Methods, 42(3), 627–633. doi:10.3758/BRM.42.3.627

Kiefer, M., Sim, E.-J., Herrnberger, B., Grothe, J., & Hoenig, K. (2008). The sound of concepts: Four markers for a link between auditory and conceptual brain systems. The Journal of Neuroscience, 28(47), 12224–12230. doi:10.1523/JNEUROSCI.3579-08.2008

Kousta, S.-T., Vinson, D. P., & Vigliocco, G. (2009). Emotion words, regardless of polarity, have a processing advantage over neutral words. Cognition, 112(3), 473–481. doi:10.1016/j.cognition.2009.06.007

Krifka, M. (2010). A note on an asymmetry in the hedonic implicatures of olfactory and gustatory terms. In S. Fuchs, P. Hoole, C. Mooshammer, & M. Zygis (Eds.), Between the regular and the particular in speech and language (pp. 235–245). Frankfurt: Peter Lang.

Landau, I. (2010). The locative syntax of experiencers. Cambridge: MIT Press.

Larsson, M., Willander, J., Karlsson, K., & Arshamian, A. (2014). Olfactory LOVER: Behavioral and neural correlates of autobiographical odor memory. Frontiers in Psychology, 5, 1–5. doi:10.3389/fpsyg.2014.00312

Levinson, S. C., Cutfield, S., Dunn, M., Enfield, N., Meira, S., & Wilkins, D. (in press). Demonstratives in cross-linguistic perspective. Cambridge: Cambridge University Press.

Levinson, S. C., & Majid, A. (2014). Differential ineffability and the senses. Mind & Language, 29, 407–427. doi:10.1111/mila.12057

Louwerse, M., & Connell, L. (2011). A taste of words: Linguistic context and perceptual simulation predict the modality of words. Cognitive Science, 35(2), 381–398. doi:10.1111/j.1551-6709.2010.01157.x

Lynott, D., & Connell, L. (2009). Modality exclusivity norms for 423 object properties. Behavior Research Methods, 41(2), 558–564. doi:10.3758/BRM.41.2.558

Lynott, D., & Connell, L. (2013). Modality exclusivity norms for 400 nouns: The relationship between perceptual experience and surface word form. Behavior Research Methods, 45(2), 516–526. doi:10.3758/s13428-012-0267-0

Majid, A. (2012). Current emotion research in the language sciences. Emotion Review, 4, 432–443. doi:10.1177/1754073912445827

Majid, A., & Burenhult, N. (2014). Odors are expressible in language, as long as you speak the right language. Cognition, 130(2), 266–270. doi:10.1016/j.cognition.2013.11.004

Majid, A., & Levinson, S. C. (2011). The senses in language and culture. The Senses and Society, 6(1), 5–18. doi:10.2752/174589311X12893982233551

Meteyard, L., Cuadrado, S. R., Bahrami, B., & Vigliocco, G. (2012). Coming of age: A review of embodiment and the neuroscience of semantics. Cortex, 48(7), 788–804. doi:10.1016/j.cortex.2010.11.002

Moors, A., De Houwer, J., Hermans, D., Wanmaker, S., van Schie, K., Van Harmelen, A.-L., ... Brysbaert, M. (2013). Norms of valence, arousal, dominance, and age of acquisition for 4,300 Dutch words. Behavior Research Methods, 45(1), 169–177. doi:10.3758/s13428-012-0243-8

Mulatti, C., Treccani, B., & Job, R. (2014). The role of the sound of objects in object identification: Evidence from picture naming. Frontiers in Psychology, 5, 1139. doi:10.3389/fpsyg.2014.01139

Nevo, O., & Heymann, E. W. (2015). Led by the nose: Olfaction in primate feeding ecology. Evolutionary Anthropology, 24(4), 137–148. doi:10.1002/evan.21458

Papies, E. K. (2013). Tempting food words activate eating simulations. Frontiers in Psychology, 4, 838. doi:10.3389/fpsyg.2013.00838

Pecher, D., Zeelenberg, R., & Barsalou, L. W. (2003). Verifying different-modality properties for concepts produces switching costs. Psychological Science, 14(2), 119–124. doi:10.1111/1467-9280.t01-1-01429

Pexman, P. M., Lupker, S. J., & Hino, Y. (2002). The impact of feedback semantics in visual word recognition: Number-of-features effects in lexical decision and naming tasks. Psychonomic Bulletin & Review, 9(3), 542–549. doi:10.3758/BF03196311

Pexman, P. M., Holyk, G. G., & Monfils, M.-H. (2003). Number-of-features effects and semantic processing. Memory & Cognition, 31(6), 842–855. doi:10.3758/BF03196439

Rabovsky, M., Sommer, W., & Abdel Rahman, R. (2012). Implicit word learning benefits from semantic richness: Electrophysiological and behavioral evidence. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38(4), 1076–1083. doi:10.1037/a0025646

Rabovsky, M., Schad, D. J., & Abdel Rahman, R. (2016). Language production is facilitated by semantic richness but inhibited by semantic density: Evidence from picture naming. Cognition, 146, 240–244. doi:10.1016/j.cognition.2015.09.016

San Roque, L., Kendrick, K. H., Norcliffe, E. J., Brown, P., Defina, R., Dingemanse, M., ... Majid, A. (2015). Vision verbs dominate in conversation across cultures, but the ranking of non-visual verbs varies. Cognitive Linguistics, 26(1), 31–60. doi:10.1515/cog-2014-0089

Sheehan, P. W. (1967). A shortened form of Betts’ questionnaire upon mental imagery. Journal of Clinical Psychology, 23(3), 386–389. doi:10.1002/1097-4679(196707)23:3<386::AID-JCLP2270230328>3.0.CO;2-S

Simmons, W. K., Ramjee, V., Beauchamp, M. S., McRae, K., Martin, A., & Barsalou, L. W. (2007). A common neural substrate for perceiving and knowing about color. Neuropsychologia, 45(12), 2802–2810. doi:10.1016/j.neuropsychologia.2007.05.002

Soudry, Y., Lemogne, C., Malinvaud, D., Consoli, S.-M., & Bonfils, P. (2011). Olfactory system and emotion: Common substrates. European Annals of Otorhinolaryngology, Head and Neck Diseases, 128(1), 18–23. doi:10.1016/j.anorl.2010.09.007

Speed, L. J., & Vigliocco, G. (2015). Space, time and speed in language and perception. In Y. Coello & M. Fischer (Eds.), Foundations of embodied cognition. New York: Psychology Press.

Stanfield, R. A., & Zwaan, R. A. (2001). The effect of implied orientation derived from verbal context on picture recognition. Psychological Science, 12(2), 153–156. doi:10.1111/1467-9280.00326

Van Dam, W. O., van Dijk, M., Bekkering, H., & Rueschemeyer, S.-A. (2012). Flexibility in embodied lexical-semantic representations. Human Brain Mapping, 33(10), 2322–2333. doi:10.1002/hbm.21365

Van Dantzig, S., Cowell, R. A., Zeelenberg, R., & Pecher, D. (2011). A sharp image or a sharp knife: Norms for the modality-exclusivity of 774 concept-property items. Behavior Research Methods, 43(1), 145–154. doi:10.3758/s13428-010-0038-8

Winter, B. (2013). Linear models and linear mixed effects models in R with linguistic applications. arXiv:1308.5499. [http://arxiv.org/pdf/1308.5499.pdf]

Winter, B. (2016). Taste and smell words form an affectively loaded part of the English lexicon. Language, Cognition and Neuroscience, 31(8), 975–988.

Winter, B., & Bergen, B. (2012). Language comprehenders represent object distance both visually and auditorily. Language and Cognition, 4(1), 1–16.

Yeshurun, Y., & Sobel, N. (2010). An odor is not worth a thousand words: From multidimensional odors to unidimensional odor objects. Annual Review of Psychology, 61, 219–241. doi:10.1146/annurev.psych.60.110707.163639

Acknowledgements

We thank Hannah Atkinson for help with stimuli development, Gerardo Ortega for help with BSL translations, and Bodo Winter and Diane Pecher for their insightful comments. This research was supported by The Netherlands Organization for Scientific Research: NWO VICI grant “Human olfaction at the intersection of language, culture and biology.”

Electronic supplementary material

Below is the link to the electronic supplementary material.

ESM 1

(XLSX 63 kb)

Appendices

Appendix A

Instructions

Procedure

Appendix B

Broad category analysis

Model1 = lmer(RT ~ BroadCategory + Distance + Length + CelexFreq + SubtlexFreq + SpokenDutchFreq + (1 + BroadCategory | Subject) + (1 | Word), RTdata, REML = FALSE)

Model2 = lmer(RT ~ BroadCategory * Distance + Length + CelexFreq + SubtlexFreq + SpokenDutchFreq + (1 + BroadCategory | Subject) + (1 | Word), RTdata, REML = FALSE)

Dominant category analysis

Model1 = lmer(RT ~ Dominant + Distance + Length + CelexFreq + SubtlexFreq + SpokenDutchFreq + (1 | Subject) + (1 | Word), RTdata, REML = FALSE)

Model2 = lmer(RT ~ Dominant * Distance + Length + CelexFreq + SubtlexFreq + SpokenDutchFreq + (1 | Subject) + (1 | Word), RTdata, REML = FALSE)

Simple models

Model1 = lmer(RT ~ Length + CelexFreq + SubtlexFreq + SpokenDutchFreq + (1 | Subject) + (1 | Word), RTdata, REML = FALSE)

Model2 = lmer(RT ~ ModalityRating + Length + CelexFreq + SubtlexFreq + SpokenDutchFreq + (1 + ModalityRating | Subject) + (1 | Word), RTdata, REML = FALSE)

Appendix C. Instructions for valence, dominance, and arousal ratings

In deze taak krijgt u woorden om te beoordelen op drie dimensies. De drie dimensies worden hieronder beschreven

Valentie/ Aangenaamheid Conditie

In welke mate verwijst het woord naar iets dat positief/aangenaam is, of negatief/onaangenaam is? (1 = zeer negatief/onaangenaam, 2 = redelijk negatief/onaangenaam, 3 = een beetje negatief/onaangenaam, 4 = neutraal, 5 = een beetje positief/aangenaam, 6 = redelijk positief/aangenaam, 7 = zeer positief/aangenaam).

Als u vindt dat “gevangenis” een zeer negatieve betekenis heeft, kies dan voor 1. Als u vindt dat “geluk” een zeer positieve betekenis heeft, kies dan voor 7. Als u vindt dat “spruiten” verwijst naar iets dat redelijk onaangenaam is, kies dan voor 2. Als u vindt dat “vakantie” verwijst naar iets dat redelijk aangenaam is, kies dan voor 6.

Actief/opwekkend conditie

In welke mate verwijst het woord naar iets dat actief/opwekkend is, of passief/kalmerend is? (1 = zeer passief/kalmerend, 2 = redelijk passief/kalmerend, 3 = een beetje passief/kalmerend, 4 = neutraal, 5 = een beetje actief/opwekkend, 6 = redelijk actief/opwekkend, 7 = zeer actief/opwindend).

Als u vindt dat “hangmat” een redelijk passieve betekenis heeft, kies dan voor 2. Als u vindt dat “werk” een redelijk actieve betekenis heeft, kies dan voor 6. Als u vindt dat “meditatie” een zeer kalmerende betekenis heeft, kies dan voor 1. Als u vindt dat “energie” een zeer opwekkend betekenis heeft, kies dan voor 7.

Kracht/dominantie conditie

In welke mate verwijst het woord naar iets dat zwak/onderdanig is, of sterk/dominant? (1 = zeer zwak/onderdanig, 2 = redelijk zwak/onderdanig, 3 = een beetje zwak/onderdanig, 4 = neutraal, 5 = een beetje sterk/dominant, 6 = redelijk sterk/dominant, 7 = zeer sterk/dominant)

Als u vindt dat “grasspriet” verwijst naar iets dat zeer zwak is, kies dan voor 1. Als u vindt dat “lawine” verwijst naar iets dat zeer sterk is, kies dan voor 7. Als u vindt dat “bediende” een redelijk onderdanige betekenis heeft, kies dan voor 2. Als u vindt dat “wraak” een redelijk dominante betekenis heeft, kies dan voor 6.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Speed, L.J., Majid, A. Dutch modality exclusivity norms: Simulating perceptual modality in space. Behav Res 49, 2204–2218 (2017). https://doi.org/10.3758/s13428-017-0852-3

DOI: https://doi.org/10.3758/s13428-017-0852-3